You will generally start up javaNNS using the LINUX command:

If this doesn't work, take a look at my Getting Started with javaNNS page. If all is well, you should get an interface window looking something like:

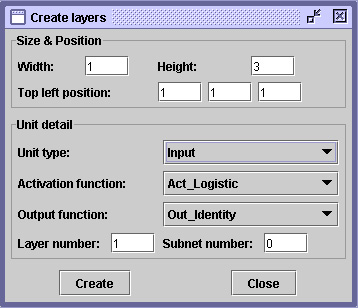

The first stage is to build your neural network. You start by getting the "Create layers" panel from the "Tools" pull down menu:

It is sensible to create your input, hidden and output layers in that order. The input units should be linear (Act_Identity) and the hidden units should be sigmoidal (Act_Logistic). The output units are normally sigmoidal for classification problems, and linear for regression problems. Leave the output functions alone (Out_Identity). "Height" and "Width" specify the number of units you require in each layer. The display is clearest if you set Width = 1 and Height to the number of units you want. Leave the Layer and Subnet numbers to update themselves. Click on "Create" once the details of each layer are specified, and then move on to specify the next layer. Your units will appear in the display window.
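To make the difference between the two activation choices concrete, here is a minimal Python sketch. The function names are illustrative stand-ins for Act_Identity and Act_Logistic, not javaNNS code, and the bias term is left out for simplicity.

```python
import math

def act_identity(net):
    """Linear unit: the activation is just the net input."""
    return net

def act_logistic(net):
    """Sigmoidal unit: squashes the net input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-net))

# Compare the two activations for a few net input values.
for net in (-2.0, 0.0, 2.0):
    print(net, act_identity(net), round(act_logistic(net), 3))
```

The bounded (0, 1) range is why sigmoidal outputs suit classification targets, while the unbounded linear output suits regression targets.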

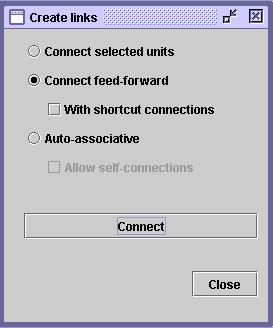

The next stage is to connect up the units. You do this by getting the "Create connections" panel from the "Tools" pull down menu:

You probably want to start with "Connect feed-forward" without short-cut connections. Clicking on "Connect" will set up the connections for you.
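As a rough picture of what "Connect feed-forward" without short-cut connections produces, the following Python sketch lists the links for a given set of layer sizes. The function name and the consecutive unit numbering are assumptions made for illustration, not javaNNS internals.

```python
def connect_feed_forward(layer_sizes):
    """Return (source, target) unit pairs linking each layer to the next."""
    # Number the units consecutively, layer by layer, starting at 1.
    layers, next_id = [], 1
    for size in layer_sizes:
        layers.append(list(range(next_id, next_id + size)))
        next_id += size
    # Without short-cut connections, only adjacent layers are linked.
    return [(src, dst)
            for lower, upper in zip(layers, layers[1:])
            for src in lower
            for dst in upper]

links = connect_feed_forward([2, 3, 1])
print(len(links))  # 2*3 + 3*1 = 9 connections
```

With short-cut connections there would also be links that skip layers, such as input units connected directly to output units.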

Next you need to load in your training, validation and testing data sets using the "Open" panel from the "File" pull down menu. Existing, correctly formatted data files will generally have the file name extension ".pat".

[If you have new raw data, I find the easiest way to get it into the correct format is to copy one of the example data files and paste your data in the appropriate places.]
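If you have a lot of raw data, a small script can save the copying and pasting. The Python sketch below builds pattern file text in the layout used by the SNNS example files as best recalled here. The header wording, the helper name `format_pat`, and the XOR data are assumptions, so compare the result against one of the example files shipped with javaNNS before relying on it.

```python
import time

def format_pat(patterns, generated_at=None):
    """Build SNNS-style pattern file text from (inputs, targets) pairs."""
    n_in = len(patterns[0][0])
    n_out = len(patterns[0][1])
    # Header layout is assumed from the SNNS example pattern files.
    lines = [
        "SNNS pattern definition file V3.2",
        "generated at " + (generated_at or time.asctime()),
        "",
        "",
        "No. of patterns : %d" % len(patterns),
        "No. of input units : %d" % n_in,
        "No. of output units : %d" % n_out,
        "",
    ]
    for i, (inputs, targets) in enumerate(patterns, start=1):
        lines.append("# Input pattern %d:" % i)
        lines.append(" ".join(str(v) for v in inputs))
        lines.append("# Output pattern %d:" % i)
        lines.append(" ".join(str(v) for v in targets))
    return "\n".join(lines) + "\n"

# Hypothetical data set: the four XOR patterns.
xor = [([0, 0], [0]), ([0, 1], [1]), ([1, 0], [1]), ([1, 1], [0])]
print(format_pat(xor))
```

Writing the returned text to a file whose name ends in ".pat" should give something you can try loading alongside the example files.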

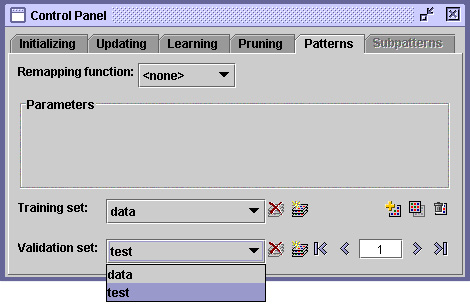

Your network is now ready to run. To control the network, open the "Control Panel" from the "Tools" pull down menu. Click on the "Patterns" tab to tell the system which file to train with and which to validate with:

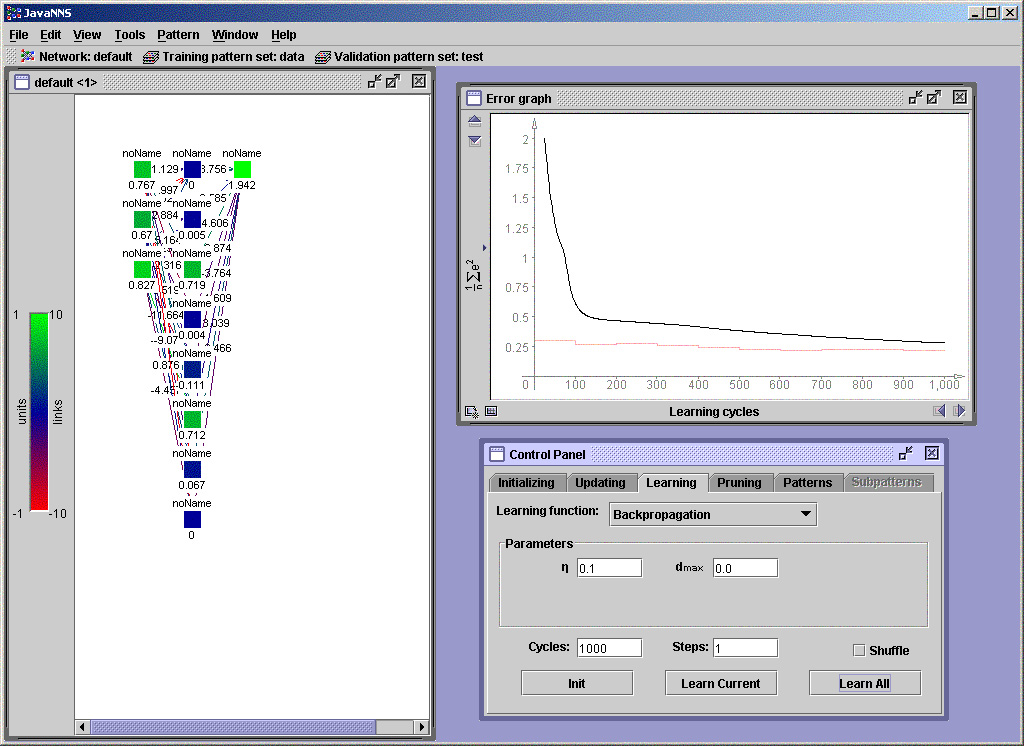

To see how well your network is doing during training, you can open the "Error graph" from the "View" pull down menu.

By clicking on the "Learning" tab in the "Control Panel", you can choose your learning algorithm and its parameters.

Some hints: It is generally a good idea to get a feel for how standard Backpropagation behaves before moving on to try more exotic learning algorithms. The default parameters are often a long way from the best parameters. If you don't know what a parameter (e.g. "dmax") does, then set it to zero!
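To see why zero is a safe setting for "dmax", it helps to sketch what standard Backpropagation does at an output unit. The Python below is a simplified illustration, not javaNNS code. It assumes that "dmax" is the largest difference between target and output that is treated as no error at all, and that "eta" is the learning rate; both function names are made up for the example.

```python
def output_delta(target, output, dmax=0.0):
    """Error signal for a logistic output unit, with a dmax tolerance."""
    diff = target - output
    # Assumption: differences no larger than dmax count as "close enough"
    # and are propagated back as zero.
    if abs(diff) <= dmax:
        diff = 0.0
    # The derivative of the logistic function is output * (1 - output).
    return diff * output * (1.0 - output)

def weight_change(eta, delta, source_output):
    """Standard backpropagation update for one connection weight."""
    return eta * delta * source_output

print(output_delta(1.0, 0.5))             # full error is used
print(output_delta(1.0, 0.95, dmax=0.1))  # within tolerance, so 0.0
print(weight_change(0.2, output_delta(1.0, 0.5), 1.0))
```

Under this reading, dmax = 0 means every non-zero difference contributes to learning, so nothing is silently ignored.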

The "Init" button sets the network weights to random values (from the range specified under the "Initializing" tab on the "Control Panel").
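In effect, initialization amounts to something like the following Python sketch, which draws each weight uniformly from a chosen range. The function name, the default range of -1 to 1, and the seed argument are assumptions for illustration only.

```python
import random

def init_weights(n_links, low=-1.0, high=1.0, seed=None):
    """Draw one random weight per connection, uniformly from [low, high]."""
    rng = random.Random(seed)  # a fixed seed makes the draw repeatable
    return [rng.uniform(low, high) for _ in range(n_links)]

# Nine weights, e.g. for a 2-3-1 feed-forward network without biases.
print(init_weights(9, seed=1))
```

Each press of "Init" corresponds to a fresh draw, so two training runs from the same settings can still end up in different places.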

Finally, click on "Learn All" and watch your network learn!

Assuming something sensible happened, it is worth saving the details of your network to file using "Save" under the "File" pull down menu (with filename extension ".net"). This will allow you to reload your network using "Open" next time you log on, or if you mess it up and want to start again, or if you crash javaNNS (which is surprisingly easy). You will then only have to reload the training data and reset your parameters, rather than rebuild the whole network.

Now try different values for the learning parameters and numbers of hidden units, and see which values work best for your particular problem.

Another hint: See how much the results change simply by starting with different random initial weights. Does the variability depend on the learning parameters?
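The same experiment can be sketched outside javaNNS. The Python below trains a tiny 2-2-1 logistic network on XOR with plain online backpropagation from several random starting points and prints the final sum-squared error for each. It is a stand-alone illustration under assumed settings (XOR data, learning rate 0.5, 2000 cycles, weights drawn from -1 to 1), not a reproduction of javaNNS.

```python
import math
import random

XOR = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
       ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]

def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))

def forward(w_hid, w_out, inputs):
    """Return (hidden activations, output activation) for one pattern."""
    x = inputs + [1.0]  # the trailing 1.0 feeds the bias weight
    hid = [logistic(sum(w * v for w, v in zip(ws, x))) for ws in w_hid]
    out = logistic(sum(w * v for w, v in zip(w_out, hid + [1.0])))
    return hid, out

def train_xor(seed, eta=0.5, n_hidden=2, cycles=2000):
    """Train from a seeded random start; return the final sum-squared error."""
    rng = random.Random(seed)
    w_hid = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(n_hidden)]
    w_out = [rng.uniform(-1, 1) for _ in range(n_hidden + 1)]
    for _ in range(cycles):
        for inputs, target in XOR:
            hid, out = forward(w_hid, w_out, inputs)
            # Error signals, computed before any weight is changed.
            d_out = (target - out) * out * (1.0 - out)
            d_hid = [d_out * w_out[j] * hid[j] * (1.0 - hid[j])
                     for j in range(n_hidden)]
            w_out = [w + eta * d_out * v for w, v in zip(w_out, hid + [1.0])]
            for j in range(n_hidden):
                w_hid[j] = [w + eta * d_hid[j] * v
                            for w, v in zip(w_hid[j], inputs + [1.0])]
    return sum((t - forward(w_hid, w_out, i)[1]) ** 2 for i, t in XOR)

for seed in range(5):
    print(seed, round(train_xor(seed), 4))
```

Changing `eta` or `cycles` and rerunning gives a quick feel for whether the spread across seeds depends on the learning parameters.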

If you have got this far, you are now ready to explore the other functionality of javaNNS by trial and error, or using the online help.